February 2026 Newsletter, Volume 208

Mar. 5th, 2026 01:43 pm

I. INTERNATIONAL FANWORKS DAY

On February 15, Communications coordinated many International Fanworks Day (IFD) activities, including a Feedback Fest highlighting fanwork recommendations, an editing challenge in conjunction with Fanlore, and an IFD Discord server with games and chatting. Additionally, Translation helped make IFD materials available in 22 languages. Thank you to everyone who joined us in celebrating!

II. ARCHIVE OF OUR OWN

In February, we celebrated AO3 reaching 10 million registered users! \o/

Accessibility, Design & Technology (AD&T) focused on some important upgrades and bug fixes, including upgrading to Ruby on Rails 8 and improving the collection revealing process. They also published release notes for December’s code changes.

AO3 Documentation began their biannual review of user-facing documentation.

In the past month, Open Doors signed five new agreements with moderators to import their archives to AO3! Fandoms include Highlander, The Magnificent Seven, My Chemical Romance, and others. They also completed the import of Slashknot, a Slipknot (band) fanfiction and fanart archive.

In January, Support received 3,811 tickets, while Policy & Abuse (PAC) received 7,972 tickets. User Response Translation completed 12 requests from PAC and 37 requests from Support. PAC continues to work closely with AD&T and Systems to combat spam that users have been experiencing across the site.

Tag Wrangling announced 28 new “No Fandom” canonical tags for February. In January, they wrangled over 648,000 tags, or around 1,400 tags per wrangling volunteer.

III. ELSEWHERE AT THE OTW

Fanlore ran a Femslash February monthly editing challenge! Systems also helped upgrade Fanlore to a new version of MediaWiki.

In February, Legal had one of their volunteers participate in a briefing for staffers in the U.S. Legislature to gain a deeper understanding of copyright fair use. Elsewhere, Legal answered a number of questions internally and from users.

TWC is preparing their March 2026 issue on “Gaming Fandom” for publication. They also completed an update of TWC’s editorial board as part of their ongoing work to expand TWC’s scope, diversify their discipline in terms of historically marginalized fans and scholars, make the journal more international in scope, and increase multimodal approaches.

IV. GOVERNANCE

Board has concluded all Board-committee check-ins and is reviewing key themes across the organization. They also voted to approve an interpretative rule of one bylaw to better accommodate any future Board members with hearing disabilities.

Board Assistants Team continued work on various projects, including revamping the OTW Board Discord and researching projects on volunteer retention, public meeting best practices, and volunteer mental health.

Organizational Culture Roadmap continued work on the OTW Code of Conduct update project by finishing a summary of internal survey results and adjusting Code of Conduct drafts based on recommendations from an external HR firm. The OTW Crisis Management Plan has been finalised and approved by the Board.

V. OUR VOLUNTEERS

In February, Volunteers & Recruiting ran recruitment for seven roles across four committees and one workgroup.

From January 23 to February 21, Volunteers & Recruiting received 182 new requests and completed 295, leaving them with 61 open requests (including induction and removal tasks listed below). As of February 21, 2026, the OTW has 985 volunteers. \o/ Recent personnel movements are listed below.

New Communications Volunteers: 3 Social Media Moderators

New Translation Volunteers: 1 Volunteer Manager and 1 Translator

New Volunteers & Recruiting Volunteers: corr and peaandsea (Chair Assistants) and 1 Volunteer

Departing Committee Chairs/Leads: Elizabeth Wiltshire (Organizational Culture Roadmap Head) and 1 Elections Chair

Departing AO3 Documentation Volunteers: 1 Editor

Departing Communications Volunteers: Abby (Social Media Moderator) and 2 Weibo Moderators

Departing Elections Volunteers: 1 Voting Process Architect

Departing Open Doors Volunteers: Mei and 2 other Import Assistants, and 1 Chair Assistant

Departing Support Volunteers: Mily and RRHand (Volunteers)

Departing Tag Wrangling Volunteers: Indes, lifeisyetfair, PinkBrain, plantpun, and 14 other Tag Wrangling Volunteers

Departing Translation Volunteers: Idiosincrasy (Volunteer Manager and Translator), 3 Volunteer Managers, and 1 Translator

Departing Volunteers & Recruiting Volunteers: corr, peaandsea, and 1 other Senior Volunteer; and 2 Volunteers

For more information about our committees and their regular activities, you can refer to the committee pages on our website.

The Organization for Transformative Works is the non-profit parent organization of multiple projects including Archive of Our Own, Fanlore, Open Doors, OTW Legal Advocacy, and Transformative Works and Cultures. We are a fan-run, donor-supported organization staffed by volunteers. Find out more about us on our website.

General Gory?: JUSTICE LEAGUE AMERICA #48-49 (JLI 65)

Mar. 5th, 2026 06:08 am

Warning for lots of Nazi and Hitler imagery.

In a meta sense, the real threat to a figure like General Glory isn’t Nazis; it’s disillusionment, the vision of America with bloodied hands that can never be made clean. The General will face both threats in these pages, and he’s much more able to address one than the other. And this was produced during the early Nineties, with reference to the early Forties, which were both relatively good times for American patriotism. [Glances at headlines, shakes head, sighs]

( The Vietnam and Trump eras have been hard enough on Captain America; I’d rather not imagine the General trying to cope with them. )

Gumdrop, a silly app for messing with my webcam

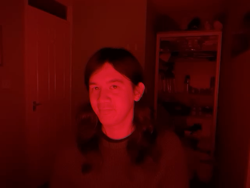

Mar. 5th, 2026 08:58 am

During the COVID lockdowns, I spent long evenings at home on my own, and I amused myself by dressing up in extravagant and glamorous clothing. One dark night, I realised I could use my home working setup to have some fun, with just a webcam and a monitor.

I turned off every light in my office, cranked up my monitor to max brightness, then I changed the colour on the screen to turn my room red or green or pink. Despite the terrible image quality, I enjoyed looking at myself in the webcam as my outfits took on a vivid new hue.

Here are three pictures with my current office lit up in different colours, each with a distinct vibe:

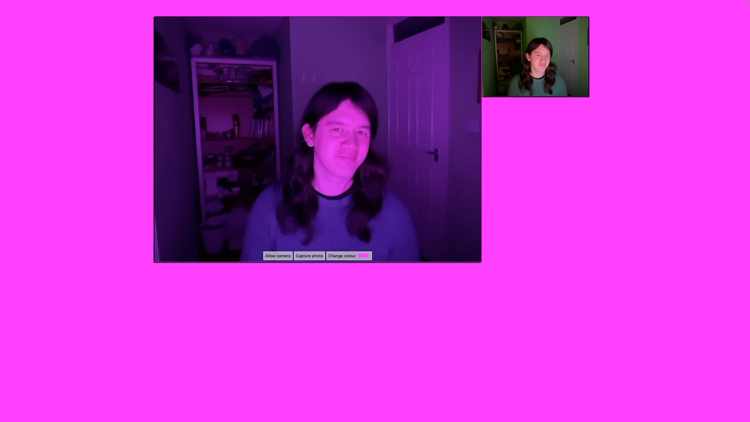

For a while I was using Keynote to change my screen colour, and Photo Booth to use the webcam. It worked, but juggling two apps was clunky, and a bunch of the screen was taken up with toolbars or UI that diluted the colour.

To make it easier, I built a tiny web app that helps me take these silly pictures. It’s mostly a solid colour background, with a small preview from the webcam, and buttons to take a picture or change the background colour. It’s a fun little toy, and it’s lived on my desktop ever since.

Here’s a screenshot:

If you want to play with it yourself, turn out the lights, crank up the screen brightness, and visit alexwlchan.net/fun-stuff/gumdrop.html. All the camera processing runs locally, so the webcam feed is completely private – your pictures are never sent to me or my server.

The picture quality on my webcam is atrocious, even more so in a poorly-lit room, but that’s all part of the fun. One thing I discovered is that I prefer this with my desktop webcam rather than my iPhone – the iPhone is a better camera, but it does more aggressive colour correction. That makes the pictures less goofy, which defeats the purpose!

I’m not going to explain how the code works – most of it comes from an MDN tutorial which explains how to use a webcam from an HTML page, so I’d recommend reading that.
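If you'd like a feel for the moving parts before reading that tutorial, here is a minimal TypeScript sketch. It is not Gumdrop's actual source: the palette, the function names, and the assumption that the page has a `<video>` and a `<canvas>` element are all made up for illustration. It relies only on the standard `navigator.mediaDevices.getUserMedia`, `drawImage` and `toDataURL` browser APIs.

```typescript
// Hypothetical palette; the post does not say which colours the real app offers.
const PALETTE: string[] = ["#ff0000", "#00ff00", "#ff69b4", "#0000ff"];

// Return the colour that follows `current` in the palette, wrapping around.
// An unknown colour falls back to the first palette entry.
function nextColour(current: string, palette: string[] = PALETTE): string {
  const index = palette.indexOf(current);
  return palette[(index + 1) % palette.length];
}

// Browser-only: show the webcam feed in a <video> element (assumed to exist).
async function startPreview(video: HTMLVideoElement): Promise<void> {
  const stream = await navigator.mediaDevices.getUserMedia({ video: true, audio: false });
  video.srcObject = stream;
  await video.play();
}

// Browser-only: copy the current video frame onto a canvas
// and return it as a PNG data URL.
function takePicture(video: HTMLVideoElement, canvas: HTMLCanvasElement): string {
  canvas.width = video.videoWidth;
  canvas.height = video.videoHeight;
  const context = canvas.getContext("2d");
  if (context === null) {
    throw new Error("2D canvas context is not available");
  }
  context.drawImage(video, 0, 0, canvas.width, canvas.height);
  return canvas.toDataURL("image/png");
}

// Browser-only: paint the whole page in the next palette colour.
function cycleBackground(current: string): string {
  const colour = nextColour(current);
  document.body.style.backgroundColor = colour;
  return colour;
}
```

In this sketch a frame goes from the video element to the canvas and out as a data URL, so nothing needs to leave the browser. A button's click handler would call `takePicture` or `cycleBackground`.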

I don’t play dress up as much as I used to, but on occasion I’ll still break it out and amuse myself by seeing what I look like in deep blue, or vivid green, or hot pink. It’s also how I took one of my favourite pictures of myself, a witchy vibe I’d love to capture more often:

Computers can be used for serious work, but they can do silly stuff as well.


Just One Thing (05 March 2026)

Mar. 5th, 2026 08:02 am

Comment with Just One Thing you've accomplished in the last 24 hours or so. It doesn't have to be a hard thing, or even a thing that you think is particularly awesome. Just a thing that you did.

Feel free to share more than one thing if you're feeling particularly accomplished! Extra credit: find someone in the comments and give them props for what they achieved!

Nothing is too big, too small, too strange or too cryptic. And in case you'd rather do this in private, anonymous comments are screened. I will only unscreen if you ask me to.

Go!

FFFX Post-Deadline PHs

Mar. 5th, 2026 08:47 pm

We've passed the deadline and have 5 remaining pinch hits, mostly requiring a half-length gift of 5,000+ words (fic) or 20+ panels (comic art).

Please take a look if you think you might be able to post a gift of this kind by 11:59pm EDT, Thursday 19 March.

My participants and I are very grateful for your interest!

Pinch hit #32 - fic - Star Trek: Strange New Worlds (TV), Murder She Wrote, Jem and the Holograms (Cartoon), G.I. Joe (Cartoon), Voltron: Lion Force (1984)

Pinch hit #39 - fic - Stargate Atlantis, Kolja | Kolya (1996), Cesta do pravěku | Journey to the Beginning of Time (1955), Jurassic Park Original Trilogy (Movies)

Pinch hit #62 - art, fic - 少年歌行 | The Blood of Youth (Live Action TV), 莲花楼 | Mysterious Lotus Casebook (TV), 琅琊榜 | Nirvana in Fire (TV), 伪装者 | The Disguiser (TV), 少年白马醉春风 | Dashing Youth (Live Action TV), 杀破狼 | Sha Po Lang - priest

Pinch hit #65 - fic - Columbo, Criminal Minds (US TV), Grey's Anatomy, Miss Marple - Agatha Christie, NCIS: Los Angeles, SEAL Team (TV), Sherlock (TV), The Professionals (TV 1977)

Pinch hit #67 - art, fic [varies by request] - Clair Obscur: Expedition 33 (Video Game), Original Work, Crossover Fandom [Brooklyn 99 & The Labyrinth], Hades (Supergiant Games Video Games)

Books

Mar. 5th, 2026 12:25 am

Award-Winning Fantasy Novels of 2025

Mid-Year Reading Roundup: Best Fantasy Books of 2025 (Jan-June) You Absolutely Need to Add to Your TBR

Readers' Favorite Fantasy -- Goodreads

Links: Small steps to resist

Mar. 4th, 2026 09:45 pm

Resist and Unsubscribe. Unsubscribe from services that support fascism. Every little bit helps! I didn't subscribe to any of these things in the first place, so I guess I've been resisting all along.

Taking action against AI harms by Anil Dash. Speaking can help get businesses off X and schools off ChatGPT.

It’s five answers to five questions. Here we go…

1. I’ve run out of patience with a rude coworker

I’ve run out of patience with a difficult coworker, Mary. I’m one of the few people who has to deal with Mary in person, and my work is closely tied with hers. She’s entry-level while I’m mid-level, but I’m not her manager or supervisor.

She has difficulty completing her work, which causes many problems for her. I have tried mightily to be her friend and mentor for the past few years, but her struggles continue. We’re locked in a difficult dynamic where I have to sit back and watch her flail, and I bear the brunt of her complaints. On a personal level, most people find her to be entitled, high maintenance, and impossible to please. She lashes out at people frequently, and today she stormed into my office following a completely normal interaction to call me rude, offensive, and dismissive. This is very common.

I’m not a confrontational person so I just take it on the chin and try to get on with my day. Over time, I’ve worked on being direct with her, setting boundaries, and learning how she wants to be communicated with. I’ve reported her to her manager and to HR multiple times, and she’s been put on performance improvement plans. Things improve for a time, then we’re right back in the same place.

Any advice to improve this situation? It’s impacting my work and my mental health. I’m worried that one day I’m going to snap and unleash years of frustration on her.

The biggest issue here is Mary’s manager, who apparently isn’t willing to deal with the situation in a way that gets it resolved. Putting someone on multiple performance improvement plans is ridiculous; the first one should have come with a clear statement that the improvement needed to be permanently sustained and if she backslid once it was over, they wouldn’t start the process all over again.

You’re limited in what you can do yourself, but at a minimum you can cut Mary off from constantly complaining to you and can leave the room if she’s being rude to you — and you should give up on trying to be her friend and mentor, because that’s not working and apparently just gets you more exposure to her rudeness (along with storming into your office). Stop trying to help someone who doesn’t appreciate it and is abusive to you.

You can also continue to report the issues you encounter with her to her boss and HR; make it less comfortable for them to keep ignoring the situation. And transfer the unpleasantness of dealing with Mary over to her manager as much as possible — meaning that if she’s not doing her work, rather than talking to her about it, take it to her manager (“I need X from Mary and don’t have it; can you please ensure I get it?”) and if she sends you rude messages, forward them to her boss with a note like, “Can you please address?” If you transfer the burden of dealing with Mary more to Mary’s boss, she might eventually be moved to act more decisively.

Related:

how to deal with a coworker who’s rude to you

2. “I forgive you” in a professional situation

I teach part-time at a university with ties to a Christian denomination, although I’m not Christian. The administration is pretty laid back, but the students are required to attend religious instruction/events weekly.

I made a remark in class within the context of the lesson that a student interpreted as meaning that I was applauding the fact that a police officer has been killed. In fact, I was indicating that the assailant had been caught.

The student walked out of class but did not make an issue of it. He came back and after class, he spoke with me alone and said he was very upset by what he thought he’d heard me say because his father was a police officer. I explained what I had meant and apologized that it came out incorrectly and that he had been upset by it. He responded, “I forgive you.”

I was taken aback by that and just thanked him. During the next class meeting, I apologized to the whole class and clarified what I had meant. No one else seemed to have noticed.

Part of what we teach in the classroom is professionalism. If the student had said he forgave me in a work context, I would have felt that was out of line. At a Christian university, I still didn’t think it was appropriate, but should I have told him not to say that in a workplace?

I talked with someone afterward who pointed out that “I forgive you” was heaps better than some other things the student could have said, which is true. He could have done or said any number of other things that would have been problematic. Should I have instructed him, or the whole class without calling him out specifically, about how to accept an apology professionally?

I’d let it go. “I forgive you” would be weird in a professional setting, but you’re better off leaving the entire incident in the past rather than reopening it and risking him making a bigger deal out of it. This incident is just not well suited for turning it into a teachable moment, because it could backfire on you in ways you don’t intend.

For what it’s worth, I’m also not a fan of turning every small thing into a lesson about professionalism; sometimes the better part of professionalism is just giving people grace for not getting something quite right. You didn’t speak perfectly (it sounds like), he didn’t speak perfectly, and you can both allow for the other being a human who doesn’t always get things exactly right and just move on.

3. My old colleague recruited me for a job, then rejected me

Last summer I had lunch with a former colleague with whom I worked successfully for many years. She revealed that a) she’d been promoted to vice president of my former division and b) she wanted me to come back. I agreed, contingent upon the conditions of the return.

Months passed before she could create a position — this company is very bureaucratic — and when she did, it turned out the hiring manager was another former colleague with whom I worked successfully. He met with me privately to sell me on taking this new position, but there was a catch: I had to interview just like anyone else. I agreed.

Four interviews later, I was rejected for the job, the reason being that it was felt I was not quite ready for the position. I felt a little blindsided, yes, but my husband was furious and wondered why I was not. He said, “They asked you to return, they persuaded you to take the job, then they rejected you? They knew your abilities when they asked — what is wrong with them?” He thinks I have been ill used, and I might agree. Is my husband right, or is this just a normal, unfortunate situation?

I understand why you’re frustrated — they wooed you for the position — but it does sound like the hiring manager was straightforward with you that you’d need to compete for it and it wouldn’t just be handed to you.

That said, their reasoning of “you’re not quite ready for the role” is pretty aggravating since that’s something they should have been able to figure out earlier on in the process or — if it really didn’t become apparent until a specific role was created and you were interviewing for it, which is possible — they should have given you different feedback, more along the lines of “we were hoping this position would be a good match because of ABC but as we went through the process, we realized that it’s going to require someone with more XYZ.” And ideally the vice president who originally said she wanted you to come back should have reached back out to you to say something like, “This turned out not to be the right role, but I’d still really love to get you over here so let’s talk about what could be a stronger match.”

So I think fury is excessive, but it’s reasonable to be extremely irritated at how they handled it.

4. Applying for on-site jobs when I can’t drive at night

What are your thoughts on applying for hybrid jobs or jobs that don’t advertise as being remote when the commute could be an issue? I can legally drive at night, but I won’t because my vision is so poor that I am no longer comfortable doing it. In my mid-sized city, public transit is awful, so I can’t easily get anywhere with it.

The Job Accommodation Network seems to say the Americans with Disabilities Act (ADA) would cover the interactive process for your commute if you were already hired, but I’m not even 100% on that.

I can find places I’d like to work that are across the city (and I own a house, so moving isn’t an option), and I don’t want people to think I’m ignoring the rules just to ignore their return-to-office mandate (even though I do think it is dumb), but for example, a 40-minute drive to cross the city takes 2.5 hours via two buses and an hour walk to a corporate location that I’ve heard is awesome to work for, and I can name a lot of places like that. Otherwise I’m stuck to the downtown corridor which is fine, but that’s all banking (yuck … been there, done it, and no). I’m currently fully remote for a local downtown law firm but trying to stomach working for the next 30 years and unsure how to handle it.

Employers are required to make the same accommodations for potential hires that they’d make for existing employees; there’s no category of “yes, we have to do it if you’re already working here, but we don’t have to offer it before you start.” It’s something that would be appropriate to raise and negotiate as part of your offer. (And yes, the ADA does require them to try to find an accommodation if it can be done without undue hardship; in this case, that might be a schedule that allows you to commute home before nightfall.)

5. Should I include union organizing work on my resume?

I am looking to move out of my current organization and maybe make a bit of a career shift. A lot of the skills and experience that would make me a strong candidate for many of the jobs I’m looking at are not from my current job itself, but from the work I do here as a union organizer and steward. I was a lead organizer in the union effort and then, once the union was authorized, a part of the bargaining committee for our first contract — so I developed and exhibited lots of communication skills, leadership, project management, negotiation skills, you name it.

I’m really proud of this work and would love to include it on my resume, but I imagine that most hiring managers wouldn’t be too keen on hiring a union organizer, especially if they thought I might try to also unionize my next workplace (and they wouldn’t necessarily be wrong to assume that). Is there a way to include this experience in my job applications? Maybe I save it for an in-person interview, or mention just the bargaining committee work but not the organizing work, or somehow talk about the experience without mentioning that it was for my union…? Or is it safer to just leave it all off entirely, even if it means I may not appear as strong of a candidate?

Yeah, the organizing work in particular will hurt you with some managers, who won’t want to invite a union organizer on to their staff. Others won’t care and will see the value in the leadership skills involved. All else being equal, I’d leave the organizing work off; the bargaining committee work is safer to include, especially if you can frame it as working collaboratively with management rather than adversarially.

The other way to look at this is that maybe you’d be happy to screen out employers who’d have a problem with the organizing work … but that depends a lot on how in-demand you expect to be as a candidate.

The post I’ve run out of patience with a rude coworker, “I forgive you” in a professional situation, and more appeared first on Ask a Manager.

more about the New Orleans trip

Mar. 4th, 2026 10:56 pm

I did more walking each day, including but not only the travel days, than I expected or planned, and found it less difficult than I would have predicted.

Saturday afternoon we met my brother at House of Blues, because they had outdoor music and a performer he liked. That was fun, and Adrian enjoyed dancing with an enthusiastic stranger. I think that was the day we took a streetcar downtown in search of lunch, only to find lines for the relatively small number of places with outdoor seating. But I'd wanted to ride a streetcar--streetcars are part of the New Orleans transit network, not just a tourist attraction, so we could get one a couple of blocks from our hotel.

Our hotel had a courtyard, which was part of why Cattitude chose it. The courtyard had an unexpected, charming cat. The drum circle I mentioned in the previous post was in the park across the street from our hotel, which is part of why Mark recommended it.

Also, the New Orleans airport terminal plays music, not very loudly, over the PA system, which is entirely fitting for an airport named after Louis Armstrong, and much better than what comes over the PA at most airports.

how do we push back as a group when we’re all remote?

Mar. 4th, 2026 06:59 pm

A reader writes:

My fully remote company just announced that our mandatory, weekly, hour-long, all-staff Zoom meeting will now be required to be camera on and mic on for all 60+ attendees. It seems like they’re trying to recreate the feeling of us all being in person. However, to me, and I imagine to a lot of people, the new requirements sound like literal torture.

This seems like a perfect “push back as a group” situation … but I don’t know how to do that in a remote setting. While I suspect my manager would also find this new requirement bonkers, I’m not so sure about his boss. I’m mostly an independent contributor and I’m not at a manager level, so I don’t have much incidental interaction with other people in the company.

What can I do here? Reach out to a handful of individuals on Teams to see if others think this is as insane as it seems to me? Then what? Write a group Teams message to the meeting leader saying, “I understand you want the company to feel closer, but we are not doing this”? In an in-person setting, I could have a bunch of low-key “this is nuts, right?” conversations with coworkers in the break room or hallways, but without that kind of casual interaction, I’m not sure how to get a group together to push back.

I don’t think cameras on for one hour-long meeting a week is outrageous, and if you frame it to people as anything in the neighborhood of “literal torture” you’re likely to lose a lot of credibility.

Requiring 60+ people to have mics on is bizarre. But that part is likely to be rescinded pretty quickly because that much background noise (as well as sipping drinks, clearing throats, etc.) is going to be chaos with so many people.

We can talk about how to generate support for pushing back as a group when you’re remote, but I don’t think this is the issue to organize around.

As for how you’d do it on something else, though:

* Ideally, before you ever need to push back as a group, you’ve put some energy into forming relationships with your coworkers. You don’t have to do that — if you haven’t, you can still raise the topic when you’re talking to someone about something work-related — but it’s a lot easier if you’ve laid that groundwork first.

* Then, when you’re talking to people, you bring up the issue that’s bothering you: “What do you think about X? I’m worried because of Y.” You feel them out and if they sound like they share your concern, you can say, “I might talk to a few others and see if other people have these concerns. If they do, maybe we can talk about it with Manager.” From there, you’d follow the rest of the advice in this post about speaking up as a group — meaning that you could decide to raise it at a team meeting and have multiple people chime in, or you could ask your boss for a group meeting specifically to talk through questions people have, or you could decide that you’ll each bring it up individually with your manager. (But as discussed in that post, it usually does not make sense for one spokesperson to raise it on everyone else’s behalf. That’s likely to be less effective, and you might find others don’t then back you up as staunchly as they let you believe they would.)

* Sometimes, too, you can just speak up in a meeting where the topic is already getting discussed. For example: “I’m thinking about X — does anyone worry about how that will affect Y?” That’s a really low-key way to do it. You’re not showing up guns blazing, just raising a potential work problem and waiting to see if others join in on your concerns.

The post how do we push back as a group when we’re all remote? appeared first on Ask a Manager.

Daily Check-In

Mar. 4th, 2026 05:56 pm

This is your check-in post for today. The poll will be open from midnight Universal or Zulu Time (8pm Eastern Time) on Wednesday, March 04, to midnight on Thursday, March 05 (8pm Eastern Time).

How are you doing?

I am OK.

11 (52.4%)

I am not OK, but don't need help right now.

10 (47.6%)

I could use some help.

0 (0.0%)

How many other humans live with you?

I am living single.

8 (36.4%)

One other person.

11 (50.0%)

More than one other person.

3 (13.6%)

Please, talk about how things are going for you in the comments, ask for advice or help if you need it, or just discuss whatever you feel like.

Day 1870: “Why are we doing this?”

Mar. 4th, 2026 03:55 pm

Today in one sentence: The Republican-led House Oversight Committee voted to subpoena Attorney General Pam Bondi for a closed-door deposition about the Justice Department’s handling of records tied to Jeffrey Epstein; Senate Republicans rejected a war powers resolution to block Trump from ordering more strikes on Iran; Trump is “actively considering and discussing” America’s role in Iran after the war with his advisers and national security team; Texas state Rep. James Talarico won the Democratic primary for U.S. Senate in Texas; Republicans Sen. John Cornyn and Texas Attorney General Ken Paxton advanced to a May 26 Republican runoff; the Office of Congressional Conduct said it had “substantial reason to believe” Rep. Tony Gonzales, a Texas Republican, had a sexual relationship with a subordinate who later died by suicide; and 54% of voters disapproved of Trump’s handling of Iran, and 52% said the U.S. shouldn’t have taken military action.

1/ The Republican-led House Oversight Committee voted to subpoena Attorney General Pam Bondi for a closed-door deposition about the Justice Department’s handling of records tied to Jeffrey Epstein. Five Republicans joined Democrats to approve the subpoena after Rep. Nancy Mace said Bondi’s claim that the Justice Department released “all of the Epstein files” was “not” supported by the record. The department to date has released more than 3 million pages to comply with the Epstein Files Transparency Act, but the Justice Department has also acknowledged that it’s withholding millions more documents under claims of privilege. Lawmakers in both parties, however, have criticized the department’s process as both incomplete and sloppy, pointing to heavy redactions and episodes where victims’ information appeared in public releases. (NBC News / Politico / CNN / New York Times / CBS News / CNBC / The Hill / Associated Press)

2/ Senate Republicans rejected a war powers resolution to block Trump from ordering more strikes on Iran. The 53-to-47 vote was mostly along party lines, with Rand Paul the only Republican who supported the resolution. “This essentially is the vote whether to go to war or not,” Paul said. Democratic Sen. Chris Murphy added: “It is amazing to me that my Republican colleagues refuse to learn lessons. Six Americans have already died for an illegal war that nobody wants. […] And for what? We still don’t even know the reason for this war.” One Democrat, Sen. John Fetterman, voted against it. A similar House vote is expected Thursday, which is also expected to fail. “As people see the consequences,” Democratic Sen. Tim Kaine said, “I think they may decide, ‘Why are we doing this?’” (Politico / Washington Post / New York Times / Associated Press / The Guardian)

3/ Trump is “actively considering and discussing” America’s role in Iran after the war with his advisers and national security team. Trump is also reportedly open to supporting armed Iranian opposition groups and the CIA is working to arm Kurdish forces based in Iraq. Both efforts are aimed at triggering mass protests to overthrow the regime after the U.S.-Israeli strikes killed Iran’s supreme leader, Ayatollah Ali Khamenei. White House press secretary Karoline Leavitt added that Trump “hasn’t ruled out” U.S. ground troops, but they “aren’t part of operational plans at this time.” (Wall Street Journal / Axios / CNN / NBC News / Bloomberg / Wall Street Journal / Associated Press / New York Times / The Hill / Reuters)

- A U.S. submarine torpedoed and sank an Iranian warship in the Indian Ocean near Sri Lanka. Defense Secretary Pete Hegseth called it the first U.S. submarine torpedo sinking of an enemy ship since World War II. (ABC News / CNN / New York Times)

- U.S. and Ecuadorian forces launched joint military operations in Ecuador against what U.S. Southern Command labeled “designated terrorist organizations.” The military released no details of the operations. (Politico / Axios / Bloomberg / The Guardian)

🔴🔵 PRIMARIES

4/ Texas state Rep. James Talarico won the Democratic primary for U.S. Senate in Texas, defeating U.S. Rep. Jasmine Crockett for the nomination. On the other side, Republicans Sen. John Cornyn and Texas Attorney General Ken Paxton advanced to a May 26 Republican runoff. Trump, who is expected to endorse Cornyn, posted on Truth Social that the Republican primary race in Texas “cannot, for the good of the Party, and our Country, itself, be allowed to go on any longer. IT MUST STOP NOW!” He added: “The candidate that I don’t Endorse to immediately DROP OUT OF THE RACE!” Talarico would be the underdog against either Republican. Democrats haven’t won any statewide race in Texas since 1994. In North Carolina, former Democratic Gov. Roy Cooper and former Republican National Committee chair Michael Whatley won their primaries, locking in a matchup expected to be central to control of the Senate. In Texas, several majority-Latino counties cast more votes in the Democratic primary than they cast for Kamala Harris in 2024, while in North Carolina the primary drew about 5% more voters than in 2022, with Democrats casting about 200,000 more primary ballots than Republicans. (Associated Press / CNN / ABC News / Axios / The Hill / NBC News / Politico / Wall Street Journal / NPR / Politico / New York Times)

- Since the start of 2025, Democrats have flipped nine Republican-held state legislative seats in special elections, while Republicans have flipped zero Democratic-held seats. (NBC News)

5/ The Office of Congressional Conduct said it had “substantial reason to believe” Rep. Tony Gonzales, a Texas Republican, had a sexual relationship with a subordinate who later died by suicide. The matter was referred to the House Ethics Committee, which opened an investigation into whether Gonzales “engaged in sexual misconduct” toward a congressional employee and whether he “discriminated unfairly by dispensing special favors or privileges.” Gonzales, who is seeking reelection, is headed to a Republican runoff against Brandon Herrera, a right-wing YouTuber and gun-rights activist known as “the AK Guy.” Separately, House Republicans blocked Rep. Nancy Mace’s measure to force the public release of records of sexual misconduct and harassment by congressional lawmakers and aides. (Politico / NBC News / CNN / CNBC / CBS News / Washington Post / New York Times)

poll/ 54% of voters disapproved of Trump’s handling of Iran, and 52% said the U.S. shouldn’t have taken military action. 89% of Democrats and 58% of independents opposed the strikes, while 77% of Republicans supported them. Republicans who identified as MAGA backed the action 90%-5%, but non-MAGA Republicans were divided, 54%-36%. (NBC News)

The 2026 midterms are in 244 days; the 2028 presidential election is in 979 days.

- Today last year: Day 1505: "Very dumb."

- Two years ago today: Day 1140: "Such chaos."

- Five years ago today: Day 44: "No matter how long it takes."

- Six years ago today: Day 1140: "A perfect storm."

- Seven years ago today: Day 774: High crimes and misdemeanors.

- Nine years ago today: Day 44: Accused.


I love the World Baseball Classic

Mar. 4th, 2026 11:27 pm

I listened to the Twins game against Puerto Rico this evening, which was happening while I was making dinner and at the gym.

I figured my Twinkies would get hammered; PR has lots of good players. But two of the best, Francisco Lindor and Carlos Correa, couldn't make the team for insurance reasons. Made me laugh that the lead-off hitter is another Minnesota Twin, Willi Castro. (Apparently he's not as good any more but I still have such a soft spot for him!) There were other former Twins on this team too; Eddie Rosario is another who got mentioned fondly by the Twins radio guys, Kris and Dan.

The Twins actually won! 6-3. Good start by Zebby (phew), good game by Alan Roden (who I keep forgetting about; one of the many players they got in the fire sale at the last trade deadline).